Technology

[Rumor] Galaxy S24’s possible Snapdragon chip outperforms Apple’s A16 Bionic

Android smartphones powered by Qualcomm’s Snapdragon 8 Gen 2 continue to debut in the market. The company may expand its Snapdragon portfolio with the 8 Plus Gen 2 in the second half of the year, while rumors about its next-gen chip have already started to emerge.

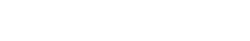

According to a wild rumor, Qualcomm’s next Snapdragon processor will give Apple’s Bionic silicon tough competition. An alleged Snapdragon 8 Gen 3 prototype was tested on Geekbench, and the results are mind-blowing news for the Android camp, including Samsung.

Follow our socials → Google News, Telegram, Twitter, Facebook

As with the Galaxy S23, Samsung is expected to use an exclusive Snapdragon 8 Gen 3 for Galaxy SoC in the Galaxy S24 flagships. Meanwhile, the standard 8 Gen 3 reportedly posted a single-core score of 1,930 and a multi-core score of 6,236, surpassing Apple’s A16 Bionic.

“Alleged 8 Gen 3 chip has 29% increase in single-core performance and 20% in multi-core.”

For comparison, Android devices powered by the Snapdragon 8 Gen 2 score an average of 1,491 in single-core and 5,164 in multi-core. If the leaked benchmark results are accurate, it’s going to be a great year for Android smartphones launching with the 8 Gen 3.
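The percentages in the quote follow from these two sets of scores. The quick check below (the helper name `percent_gain` is our own) computes the relative gain; by this arithmetic the multi-core uplift is closer to 21%, so the quoted 20% appears to be rounded down.

```python
def percent_gain(new_score: float, old_score: float) -> float:
    """Relative improvement of new_score over old_score, in percent."""
    return (new_score - old_score) / old_score * 100


# Leaked Snapdragon 8 Gen 3 scores vs. the reported Snapdragon 8 Gen 2 averages
single_core = percent_gain(1930, 1491)
multi_core = percent_gain(6236, 5164)

print(f"single-core: +{single_core:.1f}%")  # single-core: +29.4%
print(f"multi-core:  +{multi_core:.1f}%")   # multi-core:  +20.8%
```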

Qualcomm’s next Snapdragon processor is also rumored to bring an unusual 1+5+2 core configuration, trading one of the efficiency cores in its predecessor’s 1+4+3 layout for an extra performance core. Even so, it is reportedly about 20% more power-efficient than the Snapdragon 8 Gen 2, thanks to TSMC’s N4P process node.

Since the Snapdragon 8 Gen 3 is still about 10 months away from its tentative launch, this rumor shouldn’t be taken at face value. Qualcomm may change its plans at any time until the chipset is finalized, so stay tuned for more updates.

Snapdragon 8 Gen 2 for Galaxy is the brain behind the Samsung Galaxy S23 series

Photography

Samsung Space Zoom camera uses AI. Is it controversial?

Samsung Galaxy S Ultra phones are monsters when it comes to camera capabilities. On top of the hardware, Galaxy devices use processing algorithms, AI, and machine learning to deliver a great Space Zoom experience. Artificial intelligence is used almost everywhere today, so its use here shouldn’t be controversial.

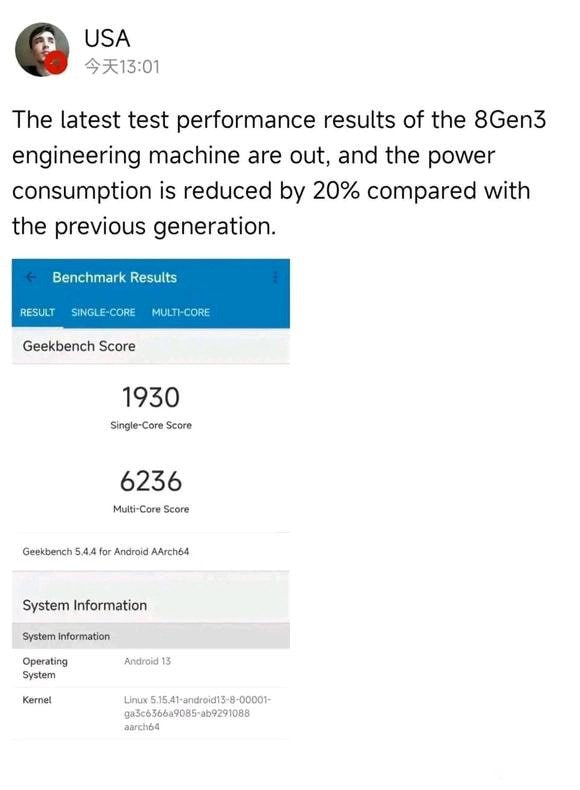

Recently, a Reddit post sparked a controversial thread by claiming that Samsung cheats on Space Zoom Moon shots by using AI. The user displayed an image of the Moon, stepped back a few paces, and photographed the displayed Moon to see what would happen.

The Samsung Galaxy Camera uses AI to upscale the Moon so it looks clearer in photos. To do so, the company trained its system on shots of the real Moon in all of its shapes, and machine learning further refines the shot during processing.

In his Reddit post, the user said he downloaded a high-resolution image of the Moon from the internet, downscaled it to 170×170 pixels, and applied a Gaussian blur. That way, he removed the fine detail from the downloaded Moon image.

He then upscaled the blurred image 4× so the phone would treat it as a distant, natural Moon. Finally, he displayed the image full-screen on a monitor, darkened the room, and photographed the screen with a Samsung phone from a distance.
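The image preparation described above (downscale to 170×170, Gaussian blur, 4× upscale) can be reproduced on a grayscale image held as a plain 2D list. This is a minimal pure-Python sketch, not the user’s actual tooling: the blur strength (`sigma = 2.0`) and the nearest-neighbour resampling are our assumptions, since those settings are not given here.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_blur(img: Image, sigma: float) -> Image:
    """Separable Gaussian blur; pixels beyond the border are clamped to the edge."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    # Horizontal pass, then a vertical pass over the intermediate result.
    tmp = [[sum(k[i + r] * img[y][min(max(x + i, 0), w - 1)] for i in range(-r, r + 1))
            for x in range(w)] for y in range(h)]
    return [[sum(k[i + r] * tmp[min(max(y + i, 0), h - 1)][x] for i in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def resize_nearest(img: Image, new_w: int, new_h: int) -> Image:
    """Nearest-neighbour resize (assumed; the post's resampling filter isn't stated here)."""
    h, w = len(img), len(img[0])
    return [[img[y * h // new_h][x * w // new_w] for x in range(new_w)] for y in range(new_h)]


def make_blurred_moon(img: Image, sigma: float = 2.0) -> Image:
    """Downscale to 170x170, blur away the fine detail, then upscale 4x."""
    small = resize_nearest(img, 170, 170)
    blurred = gaussian_blur(small, sigma)
    return resize_nearest(blurred, 170 * 4, 170 * 4)


# Synthetic stand-in for a downloaded Moon photo: fine 2x2-pixel checker "texture".
source = [[255.0 if (x // 2 + y // 2) % 2 else 0.0 for x in range(340)] for y in range(340)]
result = make_blurred_moon(source)
print(len(result), len(result[0]))  # 680 680
```

The blur flattens the checker texture toward a uniform grey, which mirrors the point of the experiment: whatever fine detail appears in the phone’s final shot was not present in the displayed image.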

The Samsung phone identified the displayed image as the real Moon, and the AI refined the shot into a clear, vibrant picture of the Moon. The user may simply have been seeing the AI technique at work for the first time, since most of us never test the camera and Space Zoom this way.

There’s no cheating here, and Samsung phones are not producing fake Moon images. The company has already explained that intelligent camera processing and several kinds of machine learning work behind the shot so users get the best possible result with Space Zoom.

AI is used everywhere. What do you think: is Samsung really cheating with Space Zoom? Let us know on Twitter.

Technology

Samsung Space Zoom Camera Technology (Moon Shot)

Story created in Dec. 2022 | Samsung ships the best camera phones in the industry, and its latest beast is the Galaxy S23 Ultra. Thanks to its innovative telephoto camera sensor and software optimization, it’s now possible to capture images of the Moon with just a Samsung Galaxy smartphone.

Today, we discuss how your Samsung phone’s Space Zoom photography feature works. Hardware is the key factor in enabling a huge zoom/magnification range, but well-optimized software significantly improves the overall experience. That’s what Samsung does, and others don’t.

Back in 2019, Samsung brought AI to its mobile camera when it launched the Galaxy S10 series. The company developed a Scene Optimizer feature that uses AI to recognize the subject and give you the best photography experience regardless of time and place.

For Moon photography specifically, the Galaxy S21 series debuted even better AI-based optimization. Starting with the Galaxy S21 series, Galaxy flagships recognize the Moon as the target using learned data and apply multi-frame synthesis and deep learning-based AI technology at the time of shooting.

A detail improvement engine is also applied to make the picture clearer. If you don’t want AI to process your photos, simply disable Scene Optimizer from the camera viewfinder.

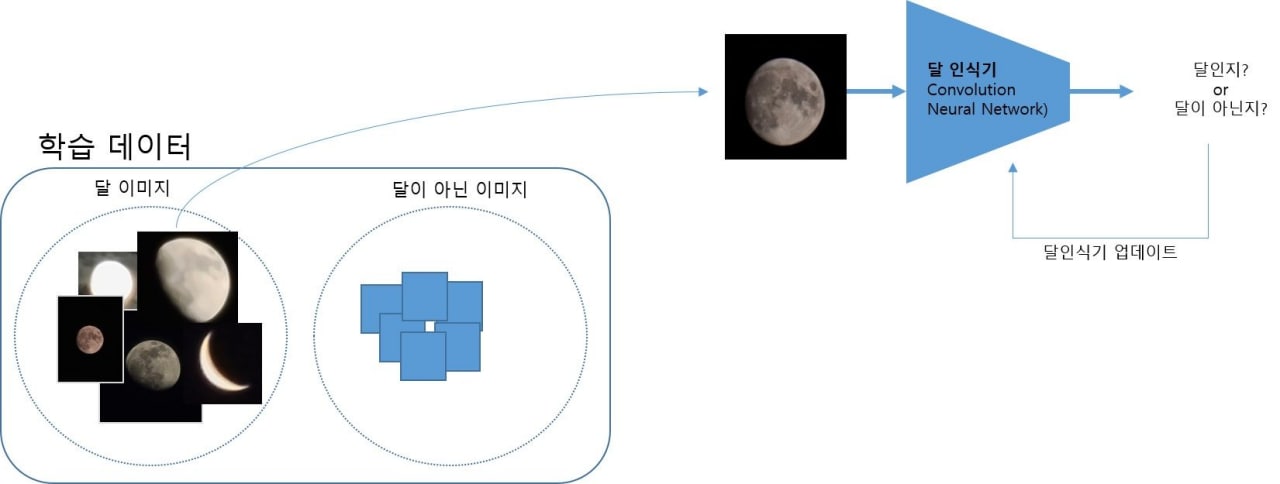

Data learning process for Moon recognition engine

Samsung developers built the Moon recognition engine by training it on various shapes of the Moon, from full to crescent, based on images of the Moon as people actually see it from Earth. It uses a deep learning model that outputs whether the Moon is present in the image and, if so, its area as a bounding box (square box).

The pre-trained AI models can also detect lunar areas in Moon images that were not used for training. However, the Moon can’t be recognized normally if it is covered by clouds or something else (non-lunar planets).
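Samsung hasn’t published the engine’s interface, so the sketch below only models the kind of result described: a presence flag plus a square bounding box. The class name, fields, and the 0.5 score threshold are hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass(frozen=True)
class MoonDetection:
    """Hypothetical output of a Moon recognition model."""
    present: bool
    box: Optional[Box]  # None when no Moon is found
    score: float


def to_detection(score: float, box: Box, threshold: float = 0.5) -> MoonDetection:
    """Turn a raw model score and candidate box into a present/absent result.

    The threshold is an assumed value, not a published Samsung parameter.
    """
    if score >= threshold:
        return MoonDetection(True, box, score)
    return MoonDetection(False, None, score)


clear_sky = to_detection(0.93, (812, 440, 96, 96))
cloudy = to_detection(0.21, (812, 440, 96, 96))  # e.g. Moon partly behind clouds
print(clear_sky.present, clear_sky.box)  # True (812, 440, 96, 96)
print(cloudy.present, cloudy.box)        # False None
```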

Brightness control process

AI enhances Moon photography, but better results require powerful hardware as well. Because a small Moon is hard to detect with regular AI scene recognition, Moon recognition works at 25x zoom or higher.

As soon as your Galaxy phone’s camera recognizes the Moon at high magnification, it darkens the preview so the Moon can be seen clearly in the viewfinder while keeping the Moon itself at optimal brightness.

This is why, if you shoot the Moon in the early evening, the surrounding sky comes out black rather than the color you see with your eyes. In addition, when the Moon is recognized, the camera sets focus to infinity to keep the Moon in focus.
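The trigger conditions described in this section can be summarized as a small decision function. This is a sketch under stated assumptions: the 25x threshold and the Scene Optimizer toggle come from the article, while the `CaptureSettings` fields and the -2.0 EV darkening value are hypothetical placeholders.

```python
from dataclasses import dataclass

MOON_MIN_ZOOM = 25.0  # from the article: Moon recognition works at 25x or higher


@dataclass
class CaptureSettings:
    exposure_bias_ev: float = 0.0  # hypothetical: negative values darken the frame
    focus: str = "auto"            # "auto" or "infinity"


def apply_moon_mode(zoom: float, moon_detected: bool, scene_optimizer_on: bool = True,
                    moon_exposure_bias_ev: float = -2.0) -> CaptureSettings:
    """Return capture settings; Moon tweaks apply only when every condition holds."""
    settings = CaptureSettings()
    if scene_optimizer_on and zoom >= MOON_MIN_ZOOM and moon_detected:
        settings.exposure_bias_ev = moon_exposure_bias_ev  # darken so the Moon isn't blown out
        settings.focus = "infinity"                        # keep the distant Moon sharp
    return settings


print(apply_moon_mode(30.0, True))   # CaptureSettings(exposure_bias_ev=-2.0, focus='infinity')
print(apply_moon_mode(10.0, True))   # CaptureSettings(exposure_bias_ev=0.0, focus='auto')
```

Turning off Scene Optimizer (`scene_optimizer_on=False`) leaves the default settings untouched, matching the opt-out described earlier.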

Shake control

Starting with the Galaxy S20 series, Samsung has shipped its Ultra variants (S20 Ultra/S21 Ultra/S22 Ultra) with up to 100x magnification. At 100x zoom the Moon looks greatly magnified, but it is not easy to shoot because the preview shakes with every movement of your hands.

To address this, Samsung created a feature called Zoom Lock, which reduces shaking once the Moon is identified so it can be captured stably on your Galaxy phone’s screen without a tripod. Zoom Lock combines OIS (optical image stabilization) and VDIS (video digital image stabilization) to maximize image stabilization and dramatically reduce shaking.

If you tap the screen at high magnification, or keep the Moon framed without moving for more than 1.5 seconds, Zoom Lock engages: the zoom map border (in the upper right of the screen) changes from white to yellow, and the Moon stops shaking, making it easy to shoot.
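The lock trigger described above behaves like a small state machine fed once per preview frame. In this sketch the 1.5-second hold and the white/yellow border come from the article; the class name, the `moving` flag, and the rule that the lock stays engaged once set are assumptions, and the zoom-level check is left out for brevity.

```python
from typing import Optional

LOCK_HOLD_SECONDS = 1.5  # from the article: hold still for more than 1.5 s


class ZoomLock:
    """Hypothetical sketch of the Zoom Lock trigger (tap, or steady framing)."""

    def __init__(self) -> None:
        self.locked = False
        self._steady_since: Optional[float] = None  # time the framing became steady

    def update(self, now: float, moving: bool, tapped: bool = False) -> str:
        """Process one preview frame and return the zoom map border colour."""
        if not self.locked:
            if tapped:
                self.locked = True  # a tap locks immediately
            elif moving:
                self._steady_since = None  # any movement restarts the timer
            elif self._steady_since is None:
                self._steady_since = now
            elif now - self._steady_since > LOCK_HOLD_SECONDS:
                self.locked = True
        return "yellow" if self.locked else "white"


lock = ZoomLock()
for t in (0.0, 0.5, 1.0, 1.5, 1.6):
    print(t, lock.update(t, moving=False))  # white until 1.6 s, then yellow
```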

Tips to take Moon shots in 100x zoom:

- Rotate your Galaxy phone to landscape mode and hold it with both hands.

- Check the Moon’s position on the zoom map and move it to the center of the screen.

- When the zoom map border turns yellow, press the capture button to photograph the Moon.

Learning process of lunar detail improvement engine

Once the Moon is visible at the proper brightness, pressing the capture button starts several steps that produce a bright, clear Moon picture. First, the camera app double-checks whether the Moon detail improvement engine is required.

Second, the camera captures multiple photos and merges them via Multi-frame Processing into a single shot that is bright and noise-reduced. However, because of the long distance and low light, compositing multiple shots alone is not enough to produce the best image.

To overcome this, the Galaxy Camera applies a deep learning-based AI detail enhancement engine (Detail Enhancement technology) at the final stage, removing remaining noise and bringing out the Moon’s details to complete a bright, clear picture.
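The three steps above can be sketched as a short pipeline. Samsung’s engine is a deep learning model and is not public, so this sketch swaps in a classic, non-AI unsharp mask purely as a stand-in for Detail Enhancement, and a plain pixel-wise mean for Multi-frame Processing; all function names are hypothetical, and step 1 is represented by the `needs_detail_engine` flag.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # one row of grayscale pixels, kept 1D for brevity


def merge_frames(frames: Sequence[Frame]) -> Frame:
    """Pixel-wise mean of already-aligned frames (stand-in for Multi-frame Processing)."""
    return [sum(px) / len(frames) for px in zip(*frames)]


def unsharp_1d(frame: Frame, amount: float = 1.0) -> Frame:
    """Classic unsharp mask: add back the difference from a 3-tap box blur."""
    n = len(frame)
    blurred = [(frame[max(i - 1, 0)] + frame[i] + frame[min(i + 1, n - 1)]) / 3 for i in range(n)]
    return [v + amount * (v - b) for v, b in zip(frame, blurred)]


def capture_moon(frames: Sequence[Frame], needs_detail_engine: bool,
                 enhance: Callable[[Frame], Frame] = unsharp_1d) -> Frame:
    # Step 1 happened earlier: needs_detail_engine records whether the engine is required.
    # Step 2: merge the burst into a single brighter, less noisy frame.
    merged = merge_frames(frames)
    # Step 3: run detail enhancement only when step 1 said it was needed.
    return enhance(merged) if needs_detail_engine else merged


burst = [[0.0, 0.0, 10.0, 10.0], [0.0, 0.0, 10.0, 10.0]]
print(capture_moon(burst, needs_detail_engine=False))  # [0.0, 0.0, 10.0, 10.0]
print(capture_moon(burst, needs_detail_engine=True))   # edge at index 1-2 is exaggerated
```

Passing the enhancer in as a parameter keeps the ordering of the steps separate from whichever enhancement model is plugged in.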

Moon shooting process

Samsung’s stock Camera app uses the AI technologies detailed above to deliver clear Moon photos. Once the camera is focused, AI automatically recognizes the scene being shot and uses scene optimization technology to adjust settings for Moon quality.

To make Moon photography steadier and easier, the Zoom Lock image stabilization function keeps the Moon clear on the preview screen. Once the Moon is framed in the desired composition, press the shutter button to start shooting.

The Galaxy Camera then combines multiple photos into a single photo to remove noise. Finally, detail improvement technology brings out the details of the Moon’s surface patterns, completing a bright, clear picture.
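Why combining photos removes noise: if each frame carries independent random noise, averaging N aligned frames cuts the noise standard deviation by about √N. The simulation below assumes Gaussian sensor noise and perfectly aligned frames (the job Zoom Lock helps with); it illustrates the principle with a plain mean and is not Samsung’s actual Multi-frame Processing.

```python
import random
import statistics
from typing import List


def simulate_burst(scene: List[float], n_frames: int, noise_sigma: float,
                   rng: random.Random) -> List[List[float]]:
    """n noisy captures of the same, perfectly aligned scene."""
    return [[p + rng.gauss(0.0, noise_sigma) for p in scene] for _ in range(n_frames)]


def average_frames(frames: List[List[float]]) -> List[float]:
    """Combine the burst into a single frame by pixel-wise averaging."""
    return [sum(px) / len(frames) for px in zip(*frames)]


def noise_level(image: List[float], scene: List[float]) -> float:
    """Standard deviation of the difference from the noise-free scene."""
    return statistics.pstdev([a - b for a, b in zip(image, scene)])


rng = random.Random(42)
scene = [100.0] * 10_000                      # a flat patch of lunar surface
burst = simulate_burst(scene, 16, 10.0, rng)  # 16 frames, noise sigma 10
single = noise_level(burst[0], scene)
merged = noise_level(average_frames(burst), scene)
print(f"one frame: {single:.2f}, 16-frame average: {merged:.2f}")
```

With 16 frames the expected noise drops from about 10 to about 2.5 (10 / √16), which is why merging alone helps but, at Moon distances and light levels, still leaves work for the detail engine.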

Update – March 12.

Samsung itself has explained that multiple processing techniques are applied before a Galaxy phone produces a crisp image of the Moon. Meanwhile, a thread posted on Reddit has made the shooting technology controversial, which it shouldn’t be.

Technology

Samsung appoints ex-TSMC expert as VP of chip packaging team

Lin Jun-cheng, who previously worked at Taiwan-based TSMC, has joined Samsung Electronics, reports say. The company appointed Lin as Senior Vice President of the advanced packaging team under Device Solutions, Samsung’s chip business division.

According to The Korea Herald, Lin’s key responsibility is likely to be overseeing the development of cutting-edge packaging technology, which plays a key role in building higher-performance chipsets.

Lin worked at TSMC from 1999 to 2017 and then served as CEO of Skytech, a Taiwanese semiconductor equipment maker. His appointment shows how Samsung is beefing up efforts to strengthen its chip packaging prowess under co-CEO Kyung Kye-hyun.

Galaxy-exclusive chipset

Recently, the South Korean tech giant established a dedicated team for research and development on its dream chipset. To speed up the project and improve its accuracy, the company has been continuously hiring experts from various tech titans.

A task force is also said to have been launched last year to speed up the commercialization of advanced packaging technology. Around the same time, Samsung hired Kim Woo-pyeong, a former engineer at another archrival, Apple, as head of the company’s packaging solution center in the US.